Data Engineering Integration 10.2.2


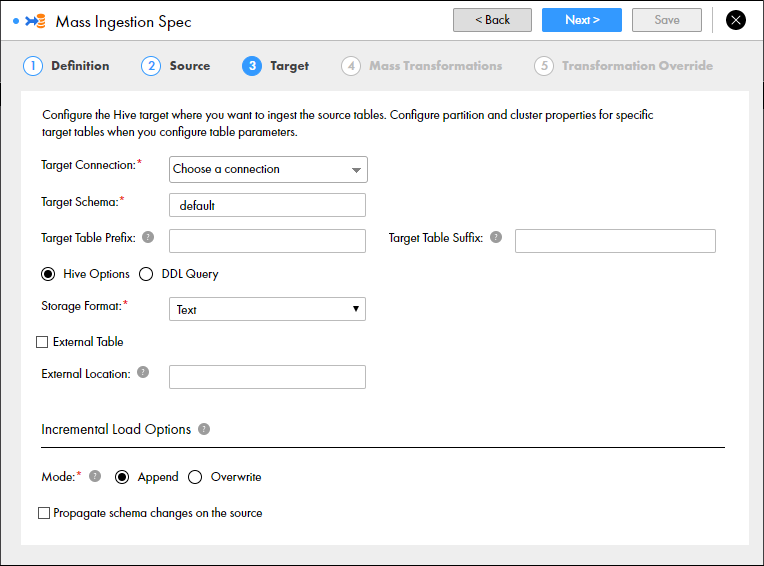

| Property | Description |
|---|---|
| Target Connection | Required. The Hive connection used to access the Hive target. If the available Hive connections change, refresh the browser, or log out of the Mass Ingestion tool and log back in. |
| Target Schema | Required. The schema that defines the target tables. |
| Target Table Prefix | The prefix added to the names of the target tables. Enter a string that contains only alphanumeric and underscore characters. The prefix is not case sensitive. |
| Target Table Suffix | The suffix added to the names of the target tables. Enter a string that contains only alphanumeric and underscore characters. The suffix is not case sensitive. |
| Hive Options | Select this option to configure the Hive target location. |
| DDL Query | Select this option to configure a custom DDL query that defines how data from the source tables is loaded to the target tables. |
| Storage Format | Required. The storage format of the target tables: Text, Avro, Parquet, or ORC. Default is Text. |
| External Table | Select this option if the target tables are external tables. |
| External Location | The external location of the Hive target. If you do not specify an external location, tables are written to the default Hive warehouse directory. A subdirectory is created under the external location for each source table that is ingested. For example, if you enter `/temp`, a source table named `PRODUCT` is ingested to `/temp/PRODUCT/`. |
| Mode | Required if you enable incremental load. Select Append or Overwrite. Append mode adds the incremental data to the existing data in the target. Overwrite mode replaces the data in the target with the incremental data. Default is Append. |
| Propagate schema changes on the source | Optional. If you select this option, new columns added to the source tables and changes to existing source columns are propagated to the target tables. |
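The Target Table Prefix and Target Table Suffix rules can be illustrated with a short Python sketch. It assumes that the prefix and suffix are concatenated directly with the source table name, and it lowercases the result because Hive treats table names case-insensitively. The function `target_table_name` is hypothetical and is not part of the Mass Ingestion tool.

```python
import re

# Hypothetical validation rule modeled on the property description:
# only letters, digits, and underscores are allowed (an empty value is allowed too).
_ALLOWED = re.compile(r"[A-Za-z0-9_]*")


def target_table_name(source_table: str, prefix: str = "", suffix: str = "") -> str:
    """Build a target table name from a source table name (illustrative only).

    Assumes simple concatenation of prefix + source table + suffix, and
    lowercases the result to model case-insensitive naming.
    """
    for label, value in (("prefix", prefix), ("suffix", suffix)):
        if not _ALLOWED.fullmatch(value):
            raise ValueError(f"invalid {label}: {value!r}")
    return f"{prefix}{source_table}{suffix}".lower()


print(target_table_name("PRODUCT", prefix="stg_", suffix="_v1"))  # stg_product_v1
```

A prefix such as `stg-` is rejected because the hyphen is neither alphanumeric nor an underscore.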
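To show how the Storage Format, External Table, and External Location settings correspond to Hive concepts, the following Python sketch builds a HiveQL `CREATE TABLE` statement from them. The `STORED AS` keywords and the `EXTERNAL` and `LOCATION` clauses are standard HiveQL. However, the functions `external_location` and `hive_ddl` are hypothetical, and the generated statement is not necessarily the DDL that Mass Ingestion issues.

```python
from __future__ import annotations

import posixpath

# Storage Format values mapped to HiveQL STORED AS keywords.
_STORED_AS = {
    "Text": "TEXTFILE",
    "Avro": "AVRO",
    "Parquet": "PARQUET",
    "ORC": "ORC",
}


def external_location(base: str, source_table: str) -> str:
    """Return the per-table subdirectory created under the external location."""
    return posixpath.join(base, source_table) + "/"


def hive_ddl(table: str, columns: list[tuple[str, str]], storage_format: str = "Text",
             external: bool = False, location: str | None = None) -> str:
    """Build an illustrative CREATE TABLE statement (hypothetical helper)."""
    cols = ", ".join(f"{name} {col_type}" for name, col_type in columns)
    kind = "EXTERNAL TABLE" if external else "TABLE"
    ddl = f"CREATE {kind} {table} ({cols}) STORED AS {_STORED_AS[storage_format]}"
    if location is not None:
        # Without a LOCATION clause, Hive uses the default warehouse directory.
        ddl += f" LOCATION '{location}'"
    return ddl


loc = external_location("/temp", "PRODUCT")
print(loc)  # /temp/PRODUCT/
print(hive_ddl("product", [("id", "INT"), ("name", "STRING")], "Parquet",
               external=True, location=loc))
```

The default arguments mirror the documented defaults: Text storage and no external location.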
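The difference between Append and Overwrite mode for incremental loads can be modeled with in-memory rows. This minimal sketch treats a table as a Python list of dictionaries. The function `apply_incremental` is hypothetical, and it ignores how the tool actually writes data to Hive.

```python
from __future__ import annotations


def apply_incremental(target: list[dict], increment: list[dict], mode: str = "Append") -> list[dict]:
    """Return the target rows after loading an incremental batch.

    Append keeps the existing rows and adds the increment.
    Overwrite replaces the existing rows with the increment.
    """
    if mode == "Append":
        return target + increment
    if mode == "Overwrite":
        return list(increment)
    raise ValueError(f"unknown mode: {mode!r}")


existing = [{"id": 1}, {"id": 2}]
batch = [{"id": 3}]
print(apply_incremental(existing, batch))               # [{'id': 1}, {'id': 2}, {'id': 3}]
print(apply_incremental(existing, batch, "Overwrite"))  # [{'id': 3}]
```

Append is the default here, matching the documented default for the Mode property.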