PowerExchange Adapters for PowerCenter 10.4.0

- All Products

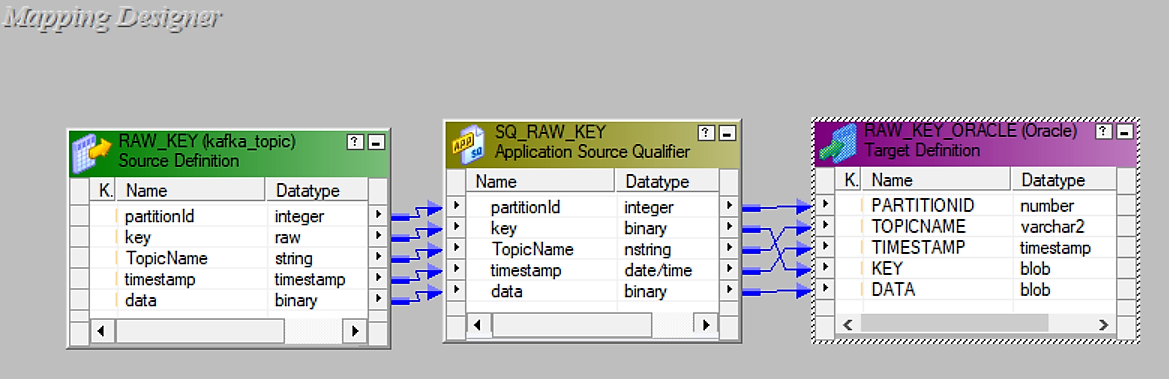

| Field Name | Data Type |
|---|---|
| partitionId | Integer |
| key | Raw |
| TopicName | String |
| timestamp | Timestamp |
| data | Binary |

| Field Name | Data Type |
|---|---|
| PARTITIONID | Number(p,s) |
| TOPICNAME | Varchar2 |
| TIMESTAMP | Varchar2 |
| DATA | Blob |
| KEY | Blob |
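Read side by side, every field in the first table has a field of the same name, ignoring case, in the second table. The Python sketch below transcribes both tables and pairs them on that basis. The dictionaries and the `match_by_name` helper are hypothetical illustrations, not part of PowerCenter, and treating the second table as a target linked to the first by name is an assumption about how the two tables relate.

```python
# Hypothetical sketch: the two tables transcribed as Python dicts.
# Assumption: the second table describes a target whose fields are
# linked to the first table's fields by a case-insensitive name match.
SOURCE_FIELDS = {
    "partitionId": "Integer",
    "key": "Raw",
    "TopicName": "String",
    "timestamp": "Timestamp",
    "data": "Binary",
}

TARGET_COLUMNS = {
    "PARTITIONID": "Number(p,s)",  # precision and scale are not given in the table
    "TOPICNAME": "Varchar2",
    "TIMESTAMP": "Varchar2",
    "DATA": "Blob",
    "KEY": "Blob",
}


def match_by_name(source, target):
    """Pair each source field with the target field whose name matches it
    ignoring case. Returns (field, source_type, column, target_type) tuples
    in source order; source fields with no match are skipped."""
    by_upper = {name.upper(): name for name in target}
    pairs = []
    for field, src_type in source.items():
        column = by_upper.get(field.upper())
        if column is not None:
            pairs.append((field, src_type, column, target[column]))
    return pairs


if __name__ == "__main__":
    for field, src_type, column, tgt_type in match_by_name(SOURCE_FIELDS, TARGET_COLUMNS):
        print(f"{field:<12} {src_type:<10} -> {column:<12} {tgt_type}")
```

Under this pairing, the Timestamp field lands in a Varchar2 field, while the Raw key and Binary data both land in Blob fields.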