PowerCenter 10.5

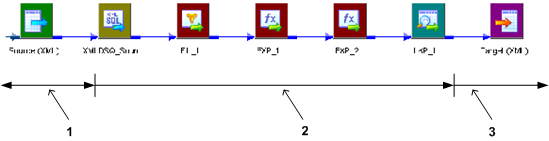

| Source Qualifier (First Stage) | Transformations (Second Stage) | Target Instance (Third Stage) |
|---|---|---|
| Row Set 1 | - | - |
| Row Set 2 | Row Set 1 | - |
| Row Set 3 | Row Set 2 | Row Set 1 |
| Row Set 4 | Row Set 3 | Row Set 2 |
| ... | ... | ... |
| Row Set n | Row Set (n-1) | Row Set (n-2) |
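The table follows a simple rule: at each step, a stage works on the row set that the stage before it handled one step earlier, so all stages are busy at once after the pipeline fills. The Python sketch below reproduces that schedule. It assumes every stage takes exactly one step per row set, and the function name `pipeline_schedule` and its parameters are hypothetical illustrations, not part of any PowerCenter API.

```python
from typing import List, Sequence


def pipeline_schedule(n_row_sets: int, stages: Sequence[str]) -> List[List[str]]:
    """Return which row set each stage holds at each time step.

    Assumption: every stage needs exactly one step per row set.
    Element [t - 1][j] is what stage j (0-based) holds at step t.
    Stage j first receives row set 1 at step j + 1, so at step t it
    holds row set t - j, or "-" if that row set has not reached it yet.
    Only steps 1..n_row_sets are produced, matching the table above.
    """
    schedule: List[List[str]] = []
    for step in range(1, n_row_sets + 1):
        row = []
        for stage_index in range(len(stages)):
            row_set = step - stage_index
            row.append(f"Row Set {row_set}" if row_set >= 1 else "-")
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    stage_names = ["Source Qualifier", "Transformations", "Target Instance"]
    for step in pipeline_schedule(4, stage_names):
        print(" | ".join(step))
```

Running it with four row sets and the three stage names prints the first four data rows of the table; passing four stage names produces the four-stage layout instead.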

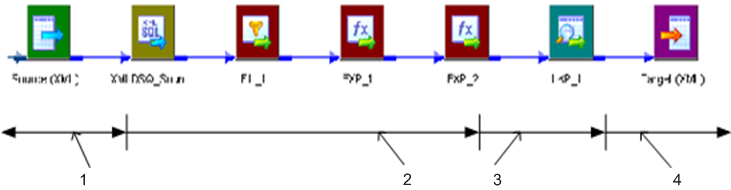

| Source Qualifier (First Stage) | FIL_1 & EXP_1 Transformations (Second Stage) | EXP_2 & LKP_1 Transformations (Third Stage) | Target Instance (Fourth Stage) |
|---|---|---|---|
| Row Set 1 | - | - | - |
| Row Set 2 | Row Set 1 | - | - |
| Row Set 3 | Row Set 2 | Row Set 1 | - |
| Row Set 4 | Row Set 3 | Row Set 2 | Row Set 1 |
| ... | ... | ... | ... |
| Row Set n | Row Set (n-1) | Row Set (n-2) | Row Set (n-3) |
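One way to picture the four-stage layout is to model each stage as a separate worker that hands finished row sets to the next stage through a queue, so the stages overlap exactly as the table shows. The Python sketch below assumes one thread per stage and small bounded queues between stages; `run_stage`, `tag`, and `run_pipeline` are hypothetical helpers, and this is an illustration of pipelined stages, not how the Integration Service is implemented.

```python
import queue
import threading
from typing import Callable, List

_DONE = object()  # sentinel that marks the end of the row stream


def run_stage(work: Callable[[str], str], inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Process row sets from inbox in order and pass each result downstream."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)  # tell the next stage that no more data is coming
            return
        outbox.put(work(item))


def tag(stage_name: str) -> Callable[[str], str]:
    """Hypothetical stage logic: record that this stage handled the row set."""
    return lambda row_set: f"{row_set} -> {stage_name}"


def run_pipeline(row_sets: List[str], stage_names: List[str]) -> List[str]:
    # Bounded queues between stages limit how far one stage can run ahead;
    # the final queue is unbounded so the last stage never blocks.
    queues: List[queue.Queue] = [queue.Queue(maxsize=1) for _ in stage_names]
    queues.append(queue.Queue())
    threads = [
        threading.Thread(target=run_stage, args=(tag(name), queues[i], queues[i + 1]))
        for i, name in enumerate(stage_names)
    ]
    for thread in threads:
        thread.start()
    for row_set in row_sets:
        queues[0].put(row_set)  # blocks while the first stage is still busy
    queues[0].put(_DONE)
    results: List[str] = []
    while (item := queues[-1].get()) is not _DONE:
        results.append(item)
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    stages = ["Source Qualifier", "FIL_1 & EXP_1", "EXP_2 & LKP_1", "Target Instance"]
    for line in run_pipeline([f"Row Set {i}" for i in range(1, 5)], stages):
        print(line)
```

Because each queue is first-in, first-out and has a single consumer, row sets reach the last stage in their original order even though the stages run concurrently.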